Overview

We propose a soft-attention-based model for action recognition in videos. We use multi-layered Recurrent Neural Networks (RNNs) with Long Short-Term Memory (LSTM) units, which are deep both spatially and temporally. Our model learns to focus selectively on parts of the video frames and classifies videos after taking a few glimpses. In effect, the model learns which parts of the frames are relevant to the task at hand and assigns them higher importance. We evaluate the model on the UCF-11 (YouTube Action), HMDB-51 and Hollywood2 datasets and analyze how the model focuses its attention depending on the scene and the action being performed.

Attention mechanism and the model

(a) Attention mechanism: The Convolutional Neural Network (GoogLeNet) takes a video frame as its input and produces a feature cube containing features from different spatial locations. The mechanism then computes x_t, the current input to the model, as the dot product of the feature cube and the location softmax l_t obtained as shown in (b), that is, a sum of the feature vectors at each location weighted by their attention probabilities. Notation and further details are explained in the paper.
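The soft-attention step in (a) can be sketched in plain Python. This is a minimal illustration, not the authors' code: it assumes the feature cube is stored as a K × K grid of D-dimensional vectors (`cube[row][col]`) and that l_t is flattened row-major into K² probabilities. The function name `attend` and this data layout are hypothetical choices made for the example.

```python
from typing import List

# Assumed layout: cube[row][col] is the D-dimensional feature vector at that cell.
FeatureCube = List[List[List[float]]]


def attend(feature_cube: FeatureCube, location_probs: List[float]) -> List[float]:
    """Return x_t, the attention-weighted sum of the cube's K*K feature vectors.

    location_probs is l_t flattened row-major: entry r * K + c is the
    probability of attending to spatial cell (r, c); the entries sum to 1.
    """
    k = len(feature_cube)
    d = len(feature_cube[0][0])
    if len(location_probs) != k * k:
        raise ValueError("l_t must have one probability per spatial location")
    x_t = [0.0] * d
    for r in range(k):
        for c in range(k):
            weight = location_probs[r * k + c]
            # Add this location's feature vector, scaled by its attention weight.
            for j, value in enumerate(feature_cube[r][c]):
                x_t[j] += weight * value
    return x_t


if __name__ == "__main__":
    # Toy cube with K = 2 and D = 2; a real CNN feature cube is far larger.
    cube = [[[1.0, 0.0], [0.0, 1.0]],
            [[2.0, 2.0], [4.0, 0.0]]]
    print(attend(cube, [0.25, 0.25, 0.25, 0.25]))  # [1.75, 0.75]: blends all cells
    print(attend(cube, [0.0, 0.0, 0.0, 1.0]))      # [4.0, 0.0]: only cell (1, 1)
```

Because x_t is a weighted average rather than a hard crop, it is differentiable in l_t, which is what lets a soft-attention model be trained end to end with backpropagation.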

(b) Recurrent model: At each time step t, our recurrent neural network (RNN) takes as input the feature slice x_t generated by the attention mechanism. It then propagates x_t through three layers of Long Short-Term Memory (LSTM) units and predicts the next location probabilities l_{t+1} and the class label y_t. See the paper for further details.
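The two predictions in (b) can be illustrated with a small sketch of the output heads only. It assumes, for illustration, that both l_{t+1} and y_t are obtained by a linear map of the top LSTM layer's hidden state h_t followed by a softmax; the three-layer LSTM stack itself is omitted, bias terms are dropped, and the names `predict_heads`, `w_loc` and `w_cls` are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matvec(w: Matrix, v: List[float]) -> List[float]:
    """Multiply a matrix (given as a list of rows) by a vector."""
    return [sum(wi * vi for wi, vi in zip(row, v)) for row in w]


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_heads(h_t: List[float], w_loc: Matrix,
                  w_cls: Matrix) -> Tuple[List[float], List[float]]:
    """Map the top LSTM layer's hidden state h_t to (l_{t+1}, y_t).

    w_loc has one row per spatial location (K*K rows) and w_cls has one row
    per action class. Biases are omitted in this sketch.
    """
    next_location_probs = softmax(matvec(w_loc, h_t))  # where to look at t + 1
    class_probs = softmax(matvec(w_cls, h_t))          # class prediction at step t
    return next_location_probs, class_probs


if __name__ == "__main__":
    h_t = [1.0, -1.0]
    w_loc = [[0.0, 0.0] for _ in range(4)]  # K = 2; zero weights give uniform attention
    w_cls = [[1.0, 0.0], [0.0, 1.0]]        # two toy classes
    l_next, y_t = predict_heads(h_t, w_loc, w_cls)
    print(l_next)  # [0.25, 0.25, 0.25, 0.25]
    print(y_t)     # the first class gets the higher probability
```

The location head feeds back into the attention step for the next frame, so the model's own hidden state decides where it looks next.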

Publications

Action Recognition using Visual Attention [arXiv | PDF | BibTeX]

Shikhar Sharma, Ryan Kiros, Ruslan Salakhutdinov

International Conference on Learning Representations (ICLR) Workshop, 2016

Action Recognition using Visual Attention [PDF | BibTeX | Poster]

Shikhar Sharma, Ryan Kiros, Ruslan Salakhutdinov

NIPS Time Series Workshop, 2015

Related Publication

Action Recognition and Video Description using Visual Attention [PDF | BibTeX]

Shikhar Sharma

Masters Thesis, University of Toronto, February 2016

Code

Visualizations

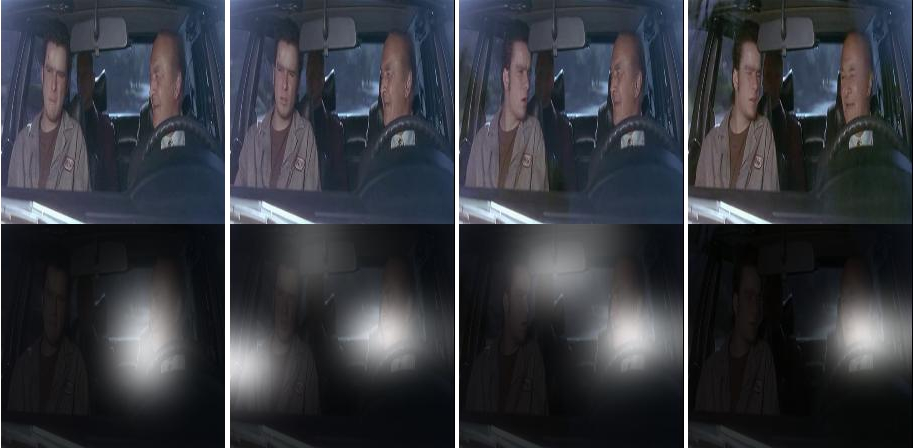

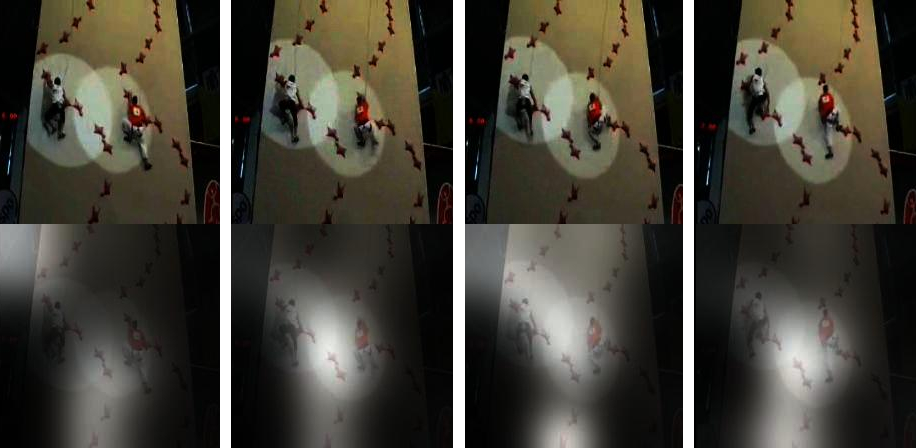

Figure 1: The GIFs above show our model's attention over time for some videos from UCF-11. White regions mark where the model is looking, and brightness indicates the strength of focus. The model learns to look at the parts of the frame relevant to the action.

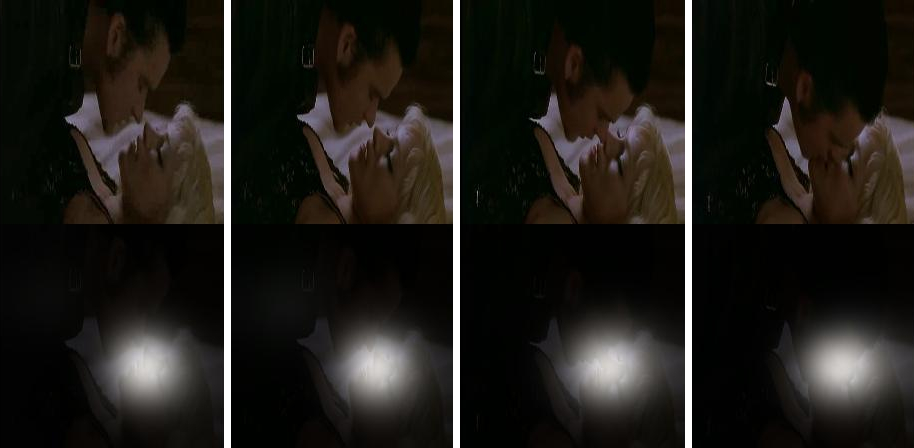

Figure 2: The images above show our model's attention over time for a few examples from the Hollywood2 and HMDB-51 datasets. White regions mark where the model is looking, and brightness indicates the strength of focus. In (a), the model learns to attend to the driver, the steering wheel and the rearview mirror to classify the example correctly. In (b), the model attends to the space between the man's and the woman's mouths. The model, however, is not perfect. In examples such as (c), the model looks at irrelevant parts and classifies the example incorrectly. In others, such as (d), the model attends to the ball and the ongoing kick yet still misclassifies the example as "somersault".

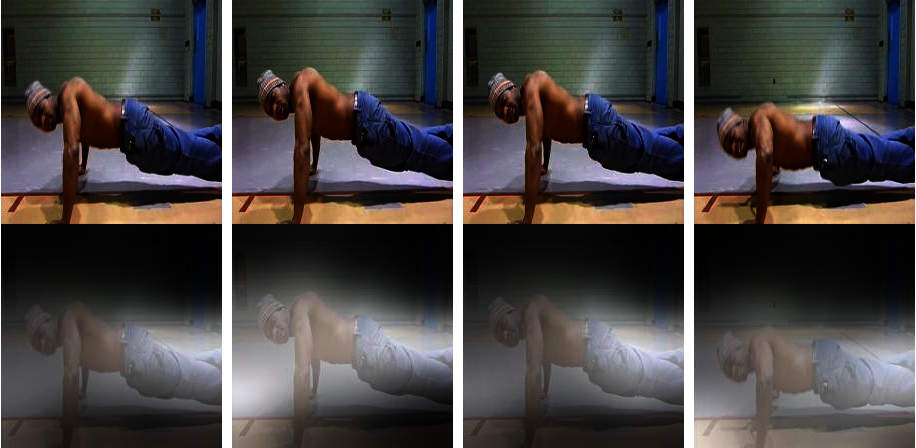

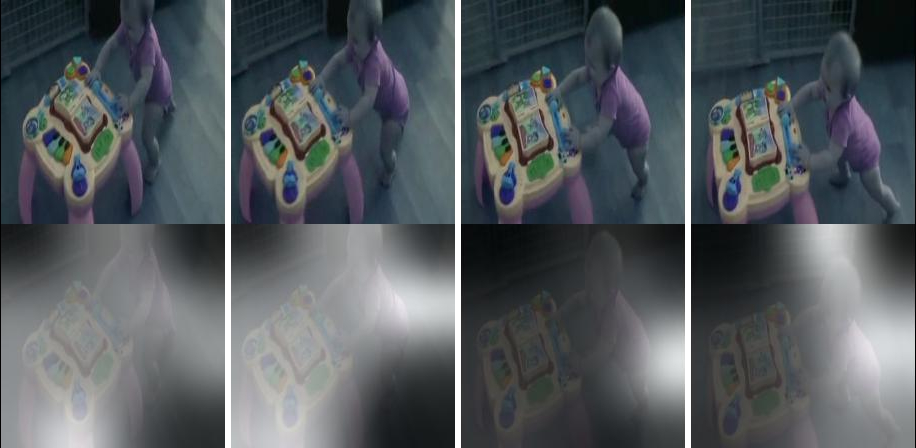

Figure 3: The images above show our model's attention over time for more examples that the model classifies correctly.

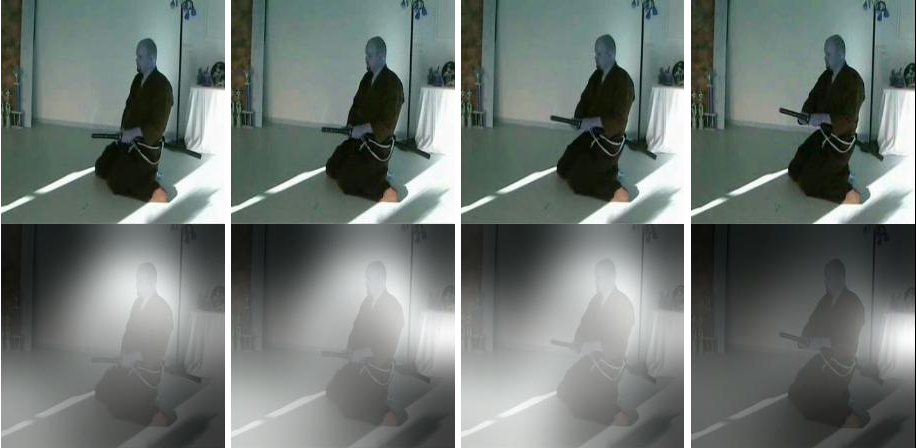

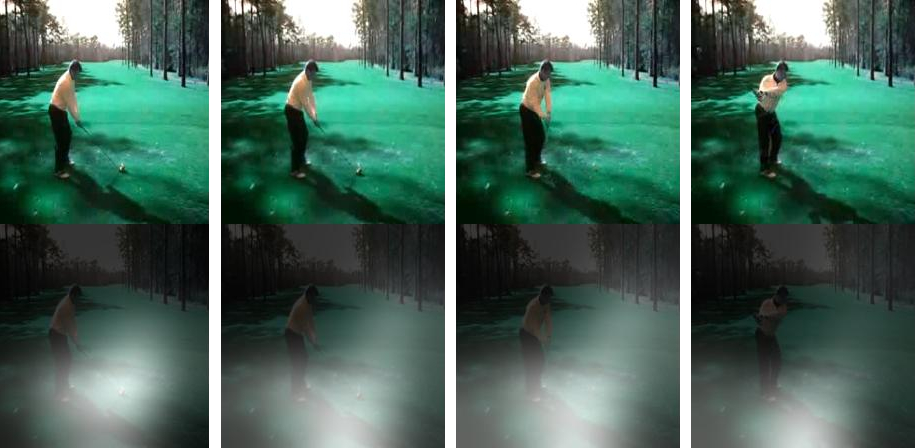

Figure 4: The images above show our model's attention over time for more examples that the model classifies incorrectly.

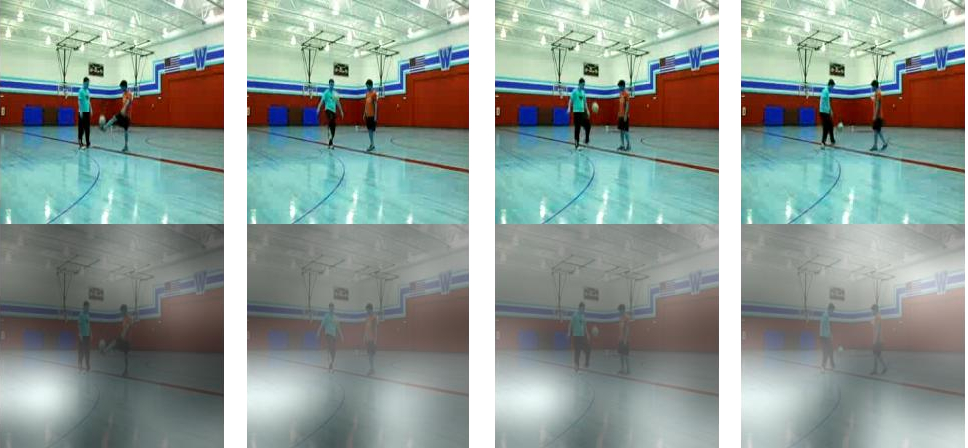

(a) λ = 0

(b) λ = 1

(c) λ = 10

Figure 5: The images above show that the variation in the model's attention can be controlled through the attention penalty λ. White regions mark where the model is looking, and brightness indicates the strength of focus. With λ = 0, the model tends to select a few locations and stay fixed on them; with λ = 10, it is forced to spread its gaze across the whole frame.
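The caption does not state the form of the attention penalty. As a minimal sketch, we assume the doubly stochastic regularizer popularized by Show, Attend and Tell for image captioning, λ Σ_i (1 − Σ_t l_{t,i})², added to the classification loss. It is zero when every location receives about one unit of attention summed over the sequence and grows when the model keeps staring at the same few cells. The function name `attention_penalty` is hypothetical; consult the paper for the exact objective.

```python
from typing import List


def attention_penalty(location_probs_over_time: List[List[float]], lam: float) -> float:
    """Assumed penalty (not confirmed by this page): lam * sum_i (1 - sum_t l_{t,i})**2.

    location_probs_over_time[t][i] is l_{t,i}, the attention weight on
    location i at time step t (each row sums to 1).
    """
    num_locations = len(location_probs_over_time[0])
    penalty = 0.0
    for i in range(num_locations):
        # Total attention that location i receives across the whole sequence.
        total_attention = sum(step[i] for step in location_probs_over_time)
        penalty += (1.0 - total_attention) ** 2
    return lam * penalty


if __name__ == "__main__":
    fixed = [[1.0, 0.0], [1.0, 0.0]]   # stares at location 0 for both steps
    spread = [[0.5, 0.5], [0.5, 0.5]]  # splits attention evenly
    for lam in (0.0, 1.0, 10.0):
        print(lam, attention_penalty(fixed, lam), attention_penalty(spread, lam))
    # 0.0 0.0 0.0
    # 1.0 2.0 0.0
    # 10.0 20.0 0.0
```

In this toy run, λ = 0 leaves the fixed gaze unpenalized, while larger values of λ make it increasingly costly compared with the evenly spread gaze, which is consistent with the trend the caption describes.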

Contact

shikhar [at] cs [dot] toronto [dot] edu